Figure 1: Question and answer, paraphrased, from the workshop.

TL;DR

This blog post accompanies the R-Ladies Philly workshop on Nov 11, 2021 (recording on YouTube), where we live coded use of the usethis, devtools, testthat, and covr packages to automate R package testing. This post complements the recording through highlighting key points, rather than listing all actions.

Workshop recording

Abstract

In this workshop, Shannon Pileggi and Gordon Shotwell discuss how to get started with unit testing in R, which is formal automated testing of functions within packages. We demonstrate handy functions in usethis and devtools, strategies for writing tests, debugging techniques, and broad concepts in function writing that facilitate a smoother testing process.

This workshop picks up exactly where we left our little ralph (aka R-Ladies Philly) package one year ago with “Your first R package in 1 hour: Tools that make R package development easy”. Participants will get the most out of this workshop if they review those materials in advance, or if they are already familiar with building R packages with devtools and usethis.

Packages

This material was developed using:

| Software / package | Version |
|---|---|
| R | 4.1.1 |
| RStudio | 351 “Ghost Orchid” |
| usethis | 2.1.2 |
| devtools | 2.4.2 |
| testthat | 3.1.0 |
| covr | 3.5.1 |
| broom | 0.7.9 |
| glue | 1.4.2 |
| magrittr | 2.0.1 |
| purrr | 0.3.4 |
| rlang | 0.4.12 |

Tool kit

This table contains the general process functions used in this workshop. Single usage functions only need to be used one time in the development process; multiple usage functions are executed as needed.

| Usage | Function | Purpose |
|---|---|---|
| Single | usethis::use_testthat() | initialize testing infrastructure |
| Multiple | usethis::use_test() | create a new test file |
| Multiple | devtools::test() | execute and evaluate all tests in package |
| Multiple | covr::report() | reports test coverage |
| Multiple | browser() | debugging: interrupt and inspect function execution |
| Multiple | devtools::check() | build package locally and check |
| Multiple | devtools::load_all() | load functions in 📂 R/ into memory |
| Multiple | usethis::use_r("function") | create R script for function |
| Multiple | usethis::use_package("package") | add package dependency |
| Multiple | devtools::document() | build and add documentation |

Other resources:

R package development cheat sheet

Ch. 12 Testing in R packages by Hadley Wickham and Jenny Bryan

Ch. 8.2 Signalling conditions in Advanced R by Hadley Wickham

testthat package documentation

{usethis} user interface functions

covr package documentation

Building tidy tools workshop at rstudio::conf(2019) by Hadley Wickham and Charlotte Wickham

Debugging:

RStudio blog post by Jonathan McPherson Debugging with the RStudio IDE

Ch 22 Debugging in Advanced R by Hadley Wickham

Indrajeet Patil’s curated list of awesome tools to assist R package development.

Why unit test

If you write R functions, then you already test your code. You write a function, see if it works, and iterate on the function until you achieve your desired result. In the package development workflow, it looks something like this:

Figure 2: Workflow cycle of function development without automated testing, from 2019 Building Tidy Tools workshop.

This process is where most of us likely start, and possibly even stay for a while. But it can be tedious, time consuming, and error-prone to manually check all possible combinations of function inputs and arguments.

Instead, we can automate tests with testthat in a new workflow.

Figure 3: Workflow cycle of function development when getting started with automated testing, from 2019 Building Tidy Tools workshop.

And once you trust and get comfortable with the tests you have set up, you can speed up the development process even more by removing the reload code step.

Figure 4: Workflow cycle of function development when comfortable with automated testing, from 2019 Building Tidy Tools workshop.

Like anything in programming, there is an up-front time investment in learning this framework and process, but with potentially significant downstream time savings.

Getting started

This post picks up exactly where we left the ralph package in Your first R package in 1 hour in November 2020. In order to keep that as a stand-alone resource, I created a second repo called ralphGetsTested for this workshop, which was a copy of ralph as we left it.

- If you want to follow along with the unit testing steps and practice yourself, fork and clone the ralph repo.

usethis::create_from_github("shannonpileggi/ralph")

- If you want to see the repository as it stood at the conclusion of the unit testing workshop, fork and clone the ralphGetsTested repo.

usethis::create_from_github("shannonpileggi/ralphGetsTested")

Keyboard shortcuts

- Ctrl + S for save file
- Ctrl + Shift + L for devtools::load_all()
- Ctrl + Shift + F10 to restart R
- Ctrl + Shift + T for devtools::test()

(9:00) Status of ralph

The little ralph package has a single, untested function that computes a correlation and returns tidy results.

compute_corr <- function(data, var1, var2){
  # compute correlation ----
  stats::cor.test(
    x = data %>% dplyr::pull({{var1}}),
    y = data %>% dplyr::pull({{var2}})
  ) %>%
    # tidy up results ----
    broom::tidy() %>%
    # retain and rename relevant bits ----
    dplyr::select(
      correlation = .data$estimate,
      pval = .data$p.value
    )
}

Here is an example execution:

compute_corr(data = faithful, var1 = eruptions, var2 = waiting)

# A tibble: 1 x 2
  correlation      pval
        <dbl>     <dbl>
1       0.901 8.13e-100

(16:30) Test set up

To get your package ready for automated testing, submit

usethis::use_testthat()

From R Packages Ch 12 Testing, this does three things:

- Creates a tests/testthat directory.
- Adds testthat to the Suggests field in the DESCRIPTION.
- Creates a file tests/testthat.R that runs all your tests when you execute devtools::check().

Figure 5: (17:54): DESCRIPTION and console output after submitting usethis::use_testthat().

Note that the edition of testthat is set to 3, which departs a bit from previous editions in both function scope and execution.

(25:10) First test

To create our first test file, submit

usethis::use_test("compute_corr")

Here, we name this file the same as our function name. This creates a new file under tests -> testthat named test-compute_corr.R, and the file pre-populates with an example test that we can replace.

For our first test, we create an object that contains the expected results of an example function execution, and then we assess the correctness of the output using the testthat::expect_ functions.

We name the overall test chunk assess_compute_corr - you can name this whatever would be useful for you to read in a testing log. In this test, we evaluate if the function returns the correct class of object, in this case, a data.frame.

test_that("assess_compute_corr", {
  expected <- compute_corr(data = faithful, var1 = eruptions, var2 = waiting)
  expect_s3_class(expected, "data.frame")
})

Now there are two ways to execute the test.

- The Run Tests button on the top right hand side of the testing script executes the tests in this script only (not all tests in the package), and executes them in a fresh R environment.
- Submitting devtools::test() (or Ctrl + Shift + T) executes all tests in the package in your global environment.
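Outside the IDE, a single test file can also be run from the console. The sketch below is an illustration rather than part of the workshop: it writes a throwaway test file to a temporary directory (the file name and its contents are made up) and runs it with `testthat::test_file()`. Inside a package, the path would instead point at a file such as `tests/testthat/test-compute_corr.R`.

```r
library(testthat)

# Hypothetical throwaway test file, written to a temporary directory
test_path <- file.path(tempdir(), "test-sketch.R")
writeLines(
  c(
    'test_that("addition works", {',
    '  expect_equal(1 + 1, 2)',
    '})'
  ),
  test_path
)

# Run only this file; the silent reporter suppresses console output
results <- test_file(test_path, reporter = "silent")

# One row per test_that() block, with counts of expectations and failures
summary_df <- as.data.frame(results)
summary_df[, c("test", "nb", "failed")]
```

The returned data frame is handy when you want to inspect results programmatically instead of reading the Build pane.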

Here, we submit our first test with the Run Tests button, and it passes! 🎉

![`test-compute_corr.R` shows 1 expectation in `test_that(...)`; `Build` pane on top right hand side shows [FAIL 0 | WARN 0 | SKIP 0 | Pass 1].](img/test-expect-class.png)

Figure 6: (29:23) Passing result after submitting the first test with the Run Tests button.

Submitting devtools::check() will also execute all tests in the package, and you will see an error in the log if the test fails.

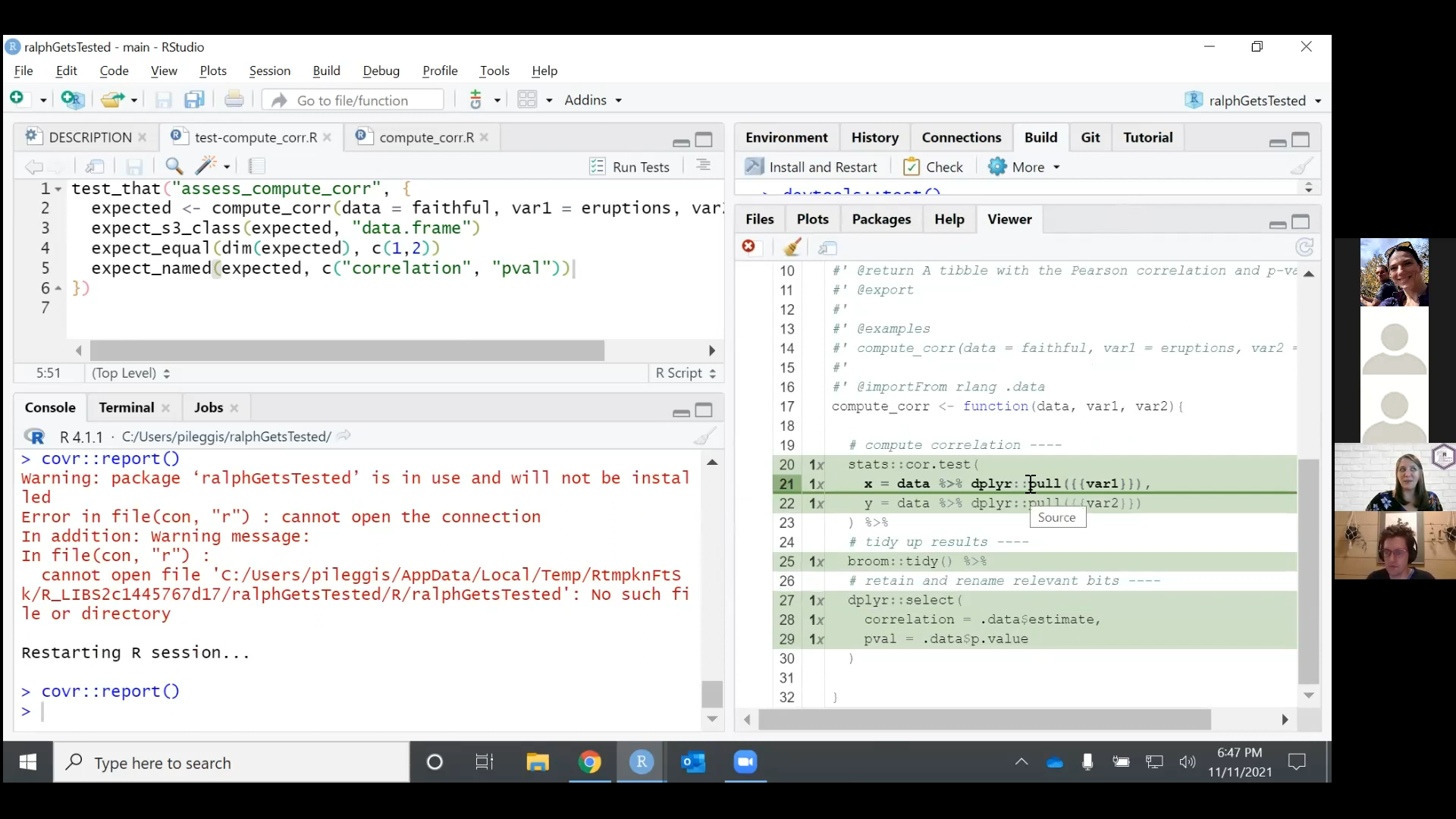

(36:08) Test coverage

Now we have added a total of three tests, and we examine our test coverage with

covr::report()

This function requires the package to not be loaded, so restart R (Ctrl + Shift + F10) prior to execution.

![`test-compute_corr.R` shows 3 expectations in `test_that(...)`; `Build` pane on top right hand side shows [FAIL 0 | WARN 0 | SKIP 0 | Pass 3]; `Viewer` panel on bottom right hand side shows 100% test coverage.](img/covr-report.png)

Figure 7: (37:05) covr::report() creates output in viewer showing the percent of code covered by the tests.

The percent coverage is the percentage of code that was executed at least once by the test_that() calls. In the viewer panel, click on Files and then R/compute_corr.R. The 1x on the left hand side counts how many times that line of code was executed when you ran your test suite; in our case, each line of code was executed one time.

Figure 8: (37:19) covr::report() shows which lines of code were executed, and how many times, as a result of your tests.

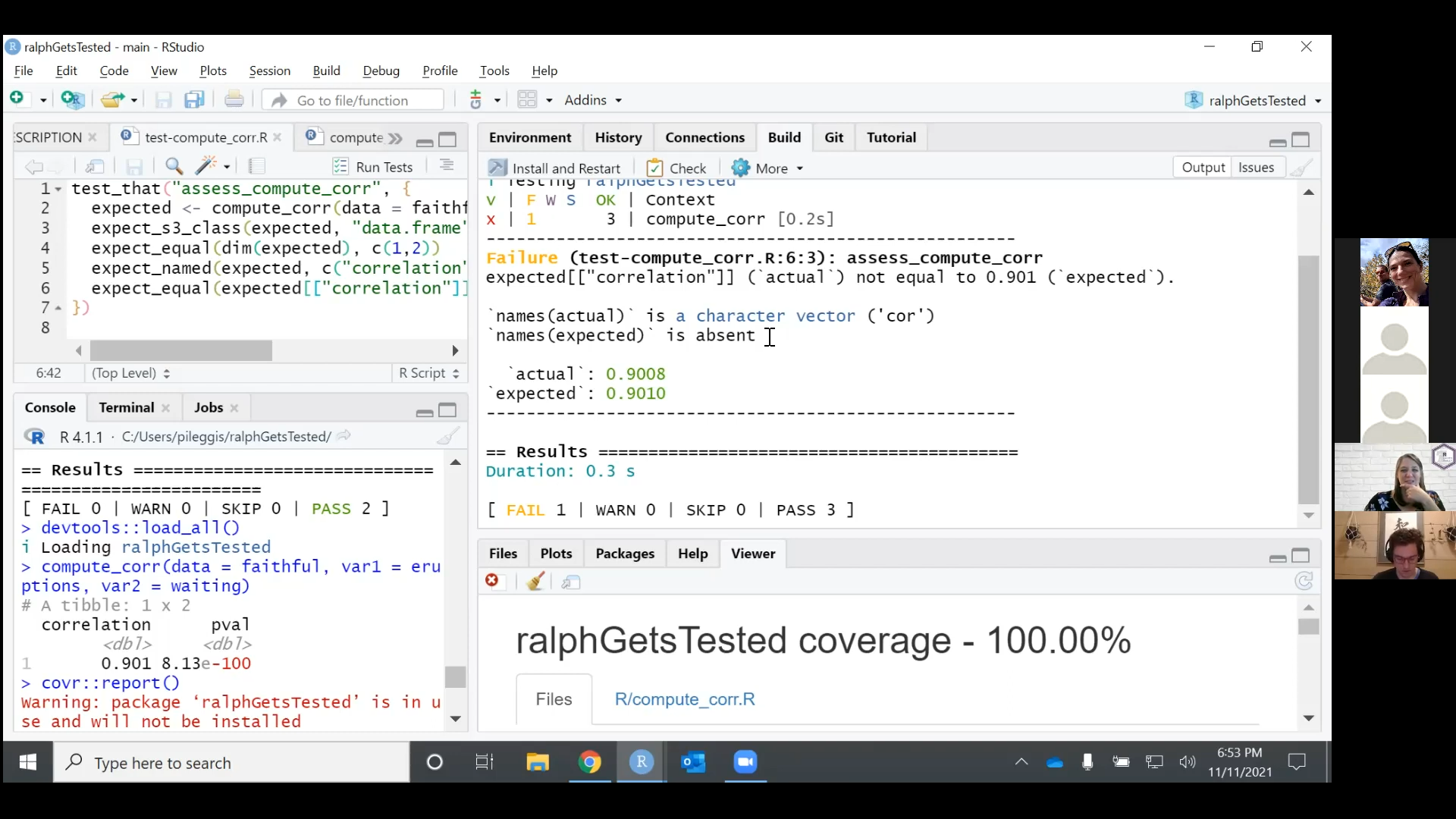
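To see what the percentage measures on a smaller scale, `covr::function_coverage()` reports coverage for a single function. The sketch below uses a hypothetical two-branch helper, `describe_count()`, that is not part of ralph. Exercising one branch leaves the other uncovered, and exercising both raises the percentage even though nothing checks that the results are correct.

```r
library(covr)

# covr relies on source references; they are kept by default in interactive
# sessions but need to be enabled explicitly under Rscript
options(keep.source = TRUE)

# Hypothetical helper with two branches (not part of ralph)
describe_count <- function(n) {
  if (n == 1) {
    "one"
  } else {
    "many"
  }
}

# Exercise only the n == 1 branch
partial <- function_coverage(describe_count, code = describe_count(1))

# Exercise both branches; nothing here asserts that the output is correct
full <- function_coverage(
  describe_count,
  code = {
    describe_count(1)
    describe_count(2)
  }
)

percent_coverage(partial)
percent_coverage(full)
```

Comparing the two numbers shows why high coverage is a useful signal but not proof of well-tested code.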

(41:27) Debugging

At this point, our tests included a fourth expectation that uses expect_equal(), and we executed our tests with Ctrl + Shift + T.

Figure 9: (42:18) Our first test failure.

Now, we see our first failed test that we need to debug, which was triggered by expect_equal().

We first demonstrate some debugging in the console, where we reveal that the correlation column of the expected tibble has a "names" attribute of "cor".

str(expected)

tibble [1 x 2] (S3: tbl_df/tbl/data.frame)
 $ correlation: Named num 0.901
  ..- attr(*, "names")= chr "cor"
 $ pval       : num 8.13e-100

Next, we demonstrate an alternative way of arriving at this through use of the browser() function. To do so,

- Insert browser() into the source of your function.
- Load your functions with devtools::load_all() (Ctrl + Shift + L).
- Execute the function.

![In compute_corr.R source script, we see `browser()` is highlighted in yellow. In console, instead of `>`, we see `Browse[1]>`. We also see a new environment window.](img/browser-1.png)

Figure 10: (46:31) Now we have entered browser mode.

This allows you to step into your function with the arguments exactly as called. The function is run in a fresh environment, and we can execute the function line by line to see what is happening. In addition, we can see objects as they are evaluated in the environment.

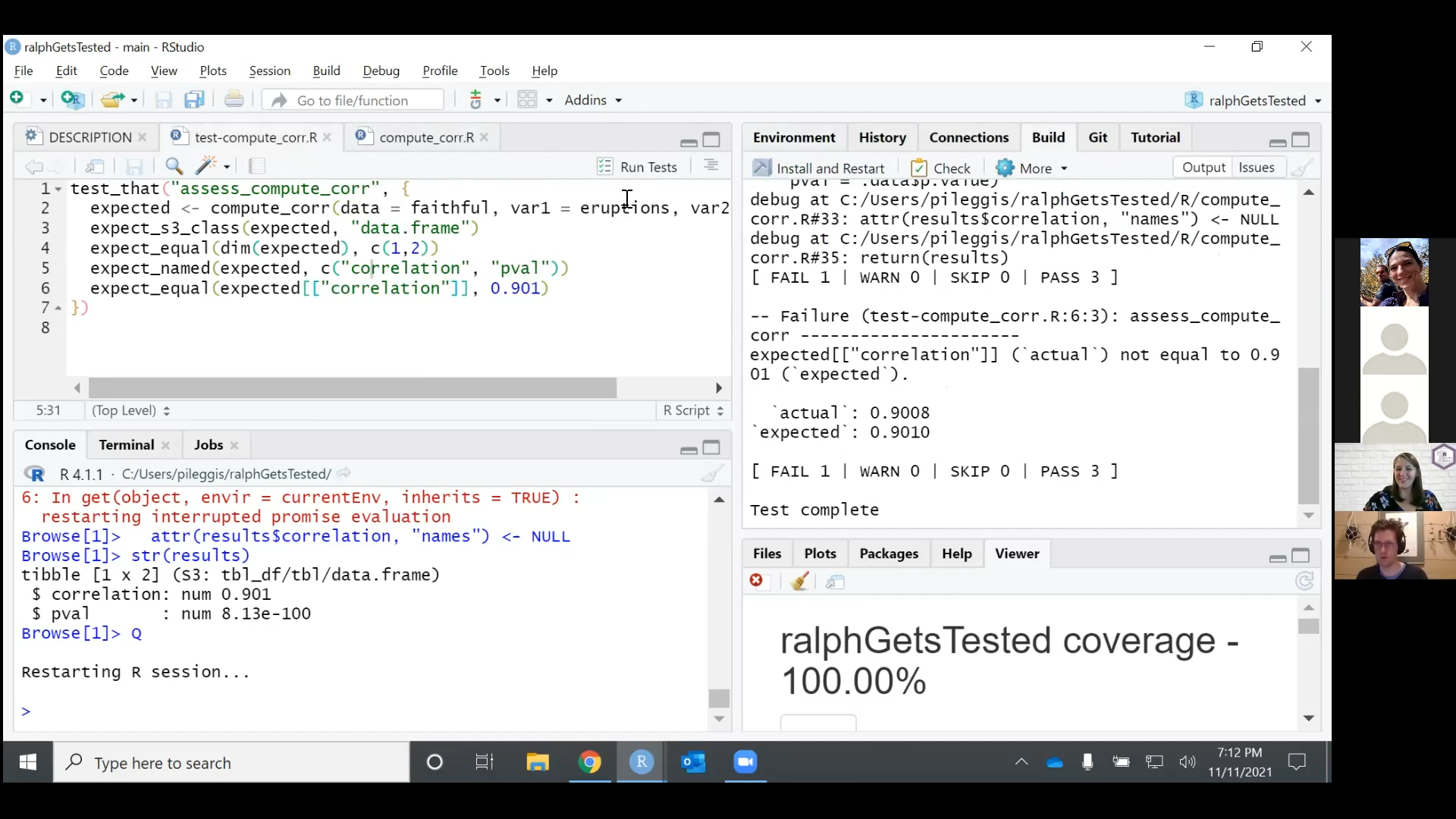

To resolve the issue:

- Stop browser mode with the red Stop square on the console,
- Modify the source of the function as shown.

compute_corr <- function(data, var1, var2){
  # compute correlation ----
  results <- stats::cor.test(
    x = data %>% dplyr::pull({{var1}}),
    y = data %>% dplyr::pull({{var2}})
  ) %>%
    # tidy up results ----
    broom::tidy() %>%
    # retain and rename relevant bits ----
    dplyr::select(
      correlation = .data$estimate,
      pval = .data$p.value
    )

  attr(results$correlation, "names") <- NULL

  return(results)
}

- devtools::load_all() (Ctrl + Shift + L)
- If needed, step into browser() mode again to confirm or further troubleshoot.
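The stray attribute originates in `stats::cor.test()`, whose `estimate` element is a named vector. The base R sketch below is an illustration and not code from the workshop. It shows the name and two equivalent ways of dropping it; `unname()` is offered here as an alternative to the `attr()` assignment used in the modified function.

```r
# The estimate returned by cor.test() carries the name "cor"
res <- stats::cor.test(x = faithful$eruptions, y = faithful$waiting)
names(res$estimate)
# "cor"

# Option 1: remove the names attribute, as in the modified function
est_attr <- res$estimate
attr(est_attr, "names") <- NULL

# Option 2: unname() returns a copy without names
est_unname <- unname(res$estimate)

# Both approaches give the same plain numeric value
identical(est_attr, est_unname)
# TRUE
```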

Now, after updating the compute_corr() function to remove the names attribute, we still have a failed test! 😱

Figure 11: (58:02) Now we have a new failure message. Note that the “debug at” lines in the Build pane indicate that I forgot to remove the browser() line from the function source after our workshop break.

We can correct this by adding the tolerance argument to the expect_equal() function.
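The sketch below illustrates why the tolerance matters, assuming the expectation compares the computed correlation with the rounded value 0.901, as in the final test file shown later in this post. `expect_failure()` is used only to demonstrate that the strict comparison fails; it would not normally appear in the package tests.

```r
library(testthat)

# Correlation for the faithful data; it rounds to 0.901 but is not exactly 0.901
r <- stats::cor(faithful$eruptions, faithful$waiting)

test_that("rounded expected values need a tolerance", {
  # With the default tolerance, the rounded value 0.901 is not equal to r
  expect_failure(expect_equal(r, 0.901))

  # Allowing a small difference makes the comparison pass
  expect_equal(r, 0.901, tolerance = 0.001)
})
```

An alternative design is to store the expected value at full precision, but a tolerance keeps the test readable when the expected number comes from printed output.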

![In the `Build` pane we see [FAIL 0 | WARN 0 | SKIP 0 | Pass 4]](img/debugging-3.png)

Figure 12: (1:00:14) Four passing tests!

(1:00:20) Testing function inputs

The {testthat} package explicitly evaluates the outputs of your function. To determine if a user correctly specifies inputs to your function,

Assess the function inputs programmatically in the source of your function.

Return a signal, such as an error, if the input does not conform to expectations.

Formalize this catch in a test with functions such as testthat::expect_error().

To check if the supplied variables actually exist in the data set, we add the following to compute_corr.R:

compute_corr <- function(data, var1, var2){

  var1_chr <- rlang::as_label(rlang::ensym(var1))
  var2_chr <- rlang::as_label(rlang::ensym(var2))

  # alert user if variable is not in data set ----
  if (!(var1_chr %in% names(data))){
    #usethis::ui_stop("{var1_chr} is not in the data set.")
    stop(glue::glue("{var1_chr} is not in the data set."))
  }

  # alert user if variable is not in data set ----
  if (!(var2_chr %in% names(data))){
    stop(glue::glue("{var2_chr} is not in the data set."))
  }

  # compute correlation ----
  results <- stats::cor.test(
    x = data %>% dplyr::pull({{var1}}),
    y = data %>% dplyr::pull({{var2}})
  ) %>%
    # tidy up results ----
    broom::tidy() %>%
    # retain and rename relevant bits ----
    dplyr::select(
      correlation = .data$estimate,
      pval = .data$p.value
    )

  attr(results$correlation, "names") <- NULL

  return(results)
}

You can enforce an error with

- the stop() function from base R,
- the stopifnot() function in base R, discussed later at (1:32:40), or
- usethis::ui_stop(), which provides some additional functionality to the user including show traceback and rerun with debug, but also adds another dependency to your package.
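Here is a minimal base R sketch of the first two options, using hypothetical helpers `check_column_stop()` and `check_column_stopifnot()` that are not part of ralph. `stop()` lets you compose the message yourself, while `stopifnot()` is terser. Naming the condition, as done here, supplies a custom message and requires R 4.0.0 or later.

```r
# Hypothetical helper using stop() with a hand-written message
check_column_stop <- function(data, column) {
  if (!(column %in% names(data))) {
    stop(column, " is not in the data set.")
  }
  invisible(TRUE)
}

# Hypothetical helper using stopifnot(); the argument name becomes the message
check_column_stopifnot <- function(data, column) {
  stopifnot("column is not in the data set." = column %in% names(data))
  invisible(TRUE)
}

# Capture the error messages instead of halting the script
msg_stop <- tryCatch(
  check_column_stop(faithful, "erruptions"),
  error = function(e) conditionMessage(e)
)
msg_stopifnot <- tryCatch(
  check_column_stopifnot(faithful, "erruptions"),
  error = function(e) conditionMessage(e)
)

msg_stop
# "erruptions is not in the data set."
msg_stopifnot
# "column is not in the data set."
```

Note the trade-off: the `stopifnot()` message is fixed text, so it cannot name the offending variable the way the `stop()` version does.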

Read Ch. 8.2 Signalling Conditions of Advanced R to learn more about messages, warnings, and errors.

When we execute the function with faulty inputs, we see our error with our handy note:

compute_corr(data = faithful, var1 = erruptions, var2 = waiting)

Error in compute_corr(data = faithful, var1 = erruptions, var2 = waiting): erruptions is not in the data set.

Now we add an additional test to catch the error and resubmit devtools::test().

test_that("assess_compute_corr", {
  expected <- compute_corr(data = faithful, var1 = eruptions, var2 = waiting)
  expect_s3_class(expected, "data.frame")
  expect_equal(dim(expected), c(1,2))
  expect_named(expected, c("correlation", "pval"))
  expect_equal(expected[["correlation"]], 0.901, tolerance = 0.001)
  # catching errors
  expect_error(compute_corr(data = faithful, var1 = erruptions, var2 = waiting))
})

![In the console we see [FAIL 0 | WARN 0 | SKIP 0 | Pass 5]](img/expect-error.png)

Figure 13: (1:09:05) devtools::test() shows in the console that we have FIVE passing tests! 🥳

Note: This introduces an additional dependency to the package through including glue or usethis in compute_corr(). Don’t forget to declare these dependencies with usethis::use_package("package-name").
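As written, expect_error() passes for any error, including one unrelated to the input check. One possible refinement is to also match the message with the `regexp` argument. The sketch below uses a hypothetical base R stand-in, `check_var()`, so that it runs without the ralph package; it is not the code used in the workshop.

```r
library(testthat)

# Hypothetical stand-in for the input check inside compute_corr()
check_var <- function(data, var_chr) {
  if (!(var_chr %in% names(data))) {
    stop(var_chr, " is not in the data set.")
  }
  invisible(var_chr)
}

test_that("a missing variable triggers the intended error", {
  # Passes only if the error message matches the regular expression
  expect_error(
    check_var(faithful, "erruptions"),
    regexp = "erruptions is not in the data set"
  )

  # A valid variable name is returned without an error
  expect_equal(check_var(faithful, "waiting"), "waiting")
})
```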

(1:14:04) More

For the remainder of the time, we explore the awesome_rladies() function:

awesome_rladies <- function(v) {
  sapply(v, function(x) {
    if (x == 1) {
      verb <- "is"
      noun <- "RLady"
    }
    if (x > 1) {
      verb <- "are"
      noun <- "RLadies"
    }
    as.character(glue::glue("There {verb} {x} awesome {noun}!"))
  })
}

Here are example executions:

awesome_rladies(1)

[1] "There is 1 awesome RLady!"

awesome_rladies(1:2)

[1] "There is 1 awesome RLady!" "There are 2 awesome RLadies!"

We discuss the following:

- Can we break this up to make it easier to test?

Note: Eventually, testing will likely end up as an exercise in refactoring your code - breaking it down such that the simplest elements each belong to a function that can be individually and independently tested.

- What type of object should the function output?

- What type of object does this function expect, and can we put up guardrails so the user doesn’t send the wrong thing? How do we test those guardrails?

Rather than write out these details in this already long post, you may watch in the recording!
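For readers who want a concrete starting point before watching, here is one possible refactoring that touches on the three questions above. It is a sketch under stated assumptions and not necessarily the solution reached in the recording: the helper `rladies_words()`, the name `awesome_rladies_sketch()`, the error message, and the use of `paste0()` in place of glue are all illustrative choices.

```r
library(testthat)

# Hypothetical helper: pick the verb and noun for a single count
rladies_words <- function(n) {
  if (n == 1) {
    list(verb = "is", noun = "RLady")
  } else {
    list(verb = "are", noun = "RLadies")
  }
}

# Hypothetical rewrite: validate input, then build one sentence per element
awesome_rladies_sketch <- function(v) {
  if (!is.numeric(v) || anyNA(v) || any(v < 1)) {
    stop("`v` must be numeric, non-missing, and at least 1.")
  }
  vapply(
    v,
    function(x) {
      words <- rladies_words(x)
      paste0("There ", words$verb, " ", x, " awesome ", words$noun, "!")
    },
    character(1)
  )
}

test_that("awesome_rladies_sketch behaves as intended", {
  # The small helper can be tested on its own
  expect_equal(rladies_words(1)$noun, "RLady")

  # The output is always a character vector
  expect_type(awesome_rladies_sketch(1:2), "character")
  expect_equal(
    awesome_rladies_sketch(1:2),
    c("There is 1 awesome RLady!", "There are 2 awesome RLadies!")
  )

  # Guardrails: wrong type and out-of-range values raise errors
  expect_error(awesome_rladies_sketch("two"), "must be numeric")
  expect_error(awesome_rladies_sketch(0), "at least 1")
})
```

Using `vapply()` with `character(1)` fixes the output type, which is one way to answer the question about what the function should return.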

Question & Answer

This discussion is paraphrased from the workshop recording. It was commented throughout that there is not a right answer to most of this. 🤷

(7:20) What is your take on getting started with unit testing?

There is something about unit testing that sounds really scary, like it is something that real programmers do and regular people don’t do, but often times it is the opposite. When you get familiar with testing your own code, it is an easy way to combat others criticizing your work because you can look at your code and see its test coverage. This is standard across different languages and types of programming. What it means for your code to be correct is that it passes tests, so this can be a fairly objective way to defend your work.

(19:50) When do you write tests?

If the function writing is a process of discovery, and you are not sure what the function will do, write the test after the function is in a stable state. In other cases, when you know precisely how you want the function to behave, writing the test before you write the function could be a useful approach (test driven development).

(22:19) When I start writing tests, I get sucked into a rabbit hole of tests. How can I have a better process for writing tests?

Have solid tests for things that you really care about as a developer. Most of the time, it is better to write more tests rather than fewer. Get a couple of tests down, and as you discover bugs, make sure there is a test for every bug that you fix.

(23:53) Is it fair to say that you should consider problems that tripped you up when building the function as good test candidates?

Yeah, for sure! If you make the mistake as you are writing the function, you are likely to make the mistake again in six months, so a test will keep you honest on those problems.

(32:50) Can we write test_that() statements with more descriptive errors?

Think of test_that() as a paragraph break to keep related expectations together (Gordon), and give the test a brief but evocative name - you do you (from the test_that() help file). There are also expect_error() and expect_warning() functions.

(37:00) How is the percent coverage from covr::report() calculated?

This evaluates which lines of code have been executed for that test. It is not necessarily evaluating if the function is tested well, but rather has this line of code ever been run by your test suite.

(38:10) You mentioned earlier that when you fix a bug, that is a good time to write a test. Do you have an example of doing this?

Yes! I develop and maintain packages for internal R users, and a common application of this for me is when a user calls the function in a reasonable way and gets a really unfriendly error message. I resolve this through writing a better error message for the function and then include that as a test with expect_error().

(39:27) How is running tests different than trying out different data types and sets on your function and debugging?

It is not different at all! What I have learned from being around other talented programmers is that they don’t have amazing brains that they can hold all these different variables in. They are able to write good software by exporting the normal checks that everyone does when writing functions into a formal set of expectations that you can get a computer to run. Testing is about getting that stuff out of your brain so that a computer can execute it.

(53:50) Do you have advice on how to choose the data to feed into the expected object?

You can choose data from base R to minimize package dependencies, write small in-line data, or use your own specific data that you add to your package. Also consider data that get to the extreme corners of your function (e.g., missing data, weird values). “Throw lizards at your function!”
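Here is a small sketch of the inline-data idea, using `stats::cor.test()` directly as a stand-in for your own function. The data frame `tiny` and its values are made up for illustration; the missing value and the too-short input are the kind of lizards worth throwing.

```r
library(testthat)

# Made-up inline data with one missing value
tiny <- data.frame(
  x = c(1, 2, 3, 4, NA),
  y = c(2, 1, 4, 3, 5)
)

test_that("edge cases behave as expected", {
  # cor.test() drops the incomplete pair; the remaining four pairs give r = 0.6
  res <- stats::cor.test(tiny$x, tiny$y)
  expect_equal(unname(res$estimate), 0.6)

  # Fewer than three complete pairs is an error
  expect_error(
    stats::cor.test(c(1, 2), c(3, 4)),
    "not enough finite observations"
  )
})
```

Small hand-made data like this has the advantage that the expected value can be worked out by hand.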

(55:57) Do you commonly see test_that() used against a script instead of a function?

You can use expect_equal() in scripts, but there are packages like {assertr} which might be more appropriate for R scripts.

(1:09:38) Regarding dependencies, what do you consider when you are developing a package?

This depends on the developer and user. For internal things, feel free to add as many dependencies as you are comfortable with. Base R can cover a lot of the functionality introduced with your dependencies. It depends on how much work you want to do to support the dependencies versus what you are getting out of it. You can also consider pulling in a single function from a package rather than declaring an entire package as a dependency.

(1:34:55) Does the {testthat} framework work for shiny apps?

I recommend putting as much logic as you can into functions that live outside of the app, and then you can use test_that() on those functions. If you are doing tests that involve reactivity in shiny apps, then you need to use {shinytest}.

Personal reflection

When curating for @WeAreRLadies on Twitter in February 2021, I asked if there were any volunteers for a workshop on unit testing, and Gordon Shotwell replied affirmatively! 🙋 At that point, we were complete strangers who had never personally interacted.

Despite having no experience with unit testing, after a bit of conversation and much encouragement from both R-Ladies Philly and Gordon, I agreed to develop a workshop with Gordon’s support. (Why not? Teaching is the best way for me to learn. 😊)

In small and infrequent time chunks reading and tinkering, three 30 minute meetings with Gordon, and a few chat exchanges, I learned so much about unit testing between February and November! And I was so glad to be able to give back and share that knowledge (and confidence!💪) with R-Ladies Philly.

I also really liked the mentor-mentee relationship modeled in this workshop - I think it made the material approachable for beginners and elevated for those more experienced. It also put me at ease during workshop preparation knowing that Gordon could respond to questions that I likely wouldn’t have had experience with. It is a workshop format I highly recommend trying.

Acknowledgements

Thank you very much to R-Ladies Philly for hosting this workshop. In particular, Alice Walsh provided feedback on workshop materials and this blog post. In addition, many thanks to Gordon Shotwell for volunteering his time and expertise to our learning. 💜